CW: This article will discuss child exploitation, sexual violence, and violations of consent

Artificial intelligence is one of those concepts that can evoke both wonder and anxiety. The idea that a computer can learn and grow is mind-blowing, but anyone who has ever seen a sci-fi movie will tell you that there are some major concerns to consider.

That has not stopped AI-generated art from becoming one of the fastest-growing trends on the internet. While there is plenty of controversy surrounding this new wave of technology, one question is at the forefront of everyone’s minds…

“How can I use this revolutionary technology to make porn?”

For better or worse, AI porn is here to stay and there are some serious issues we need to address.

This discussion may feel like the horniest episode of Star Trek: The Next Generation, but from stolen faces to truly unsettling creations, there is a lot to worry about with AI porn.

What is AI Art, anyway?

In the briefest explanation possible, AI-generated art is created by a computer program, running a model such as Stable Diffusion, with human input. That input is a written description, a base image, or both.

For example, if you type in “a cat wearing suspenders and glasses working at an office” then the computer spits out something like this:

Pretty crazy, right?

The more detailed your prompt is, using a combination of keywords, the crazier it gets. Whatever you can imagine, the programs can give it to you.

How does this all work? First off, what we are seeing is not AI in the way most people think about it. Generative AI programs like the popular Midjourney are using machine learning, meaning they train with visual information and learn what words connect to specific images.

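During training, the program takes an image paired with a keyword or phrase, adds visual noise, and learns to rebuild the original image. To make something new, it starts from pure noise and cleans it up step by step, guided by your prompt.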
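To make the “adds visual noise” step more concrete, here is a minimal Python sketch of the forward (noise-adding) half of a diffusion model. It assumes the simple linear noise schedule from the original DDPM paper (1,000 steps, with per-step noise growing from 0.0001 to 0.02) and a toy four-pixel “image”; Stable Diffusion uses its own schedule and works on a compressed representation of the image, and the function names here are hypothetical.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, evenly spaced from beta_start to beta_end (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar at step t = product of (1 - beta) up to t: how much original signal survives."""
    alpha_bars = []
    running = 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(pixels, alpha_bar, rng):
    """Forward diffusion: noisy = sqrt(alpha_bar) * original + sqrt(1 - alpha_bar) * Gaussian noise."""
    signal = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [signal * x + noise_scale * rng.gauss(0.0, 1.0) for x in pixels]

if __name__ == "__main__":
    rng = random.Random(0)
    image = [0.8, -0.2, 0.5, -0.9]  # toy "image": four pixel values scaled to [-1, 1]
    alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
    for t in (0, 250, 500, 999):
        noisy = add_noise(image, alpha_bars[t], rng)
        print(f"step {t:4d}: signal kept = {math.sqrt(alpha_bars[t]):.3f}, "
              f"pixels = {[round(p, 2) for p in noisy]}")
```

As the step number climbs, the “signal kept” value shrinks toward zero and the pixels dissolve into random static. The reverse half, where a trained neural network predicts and removes that noise one step at a time, is what actually generates pictures and is not shown here.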

The process is like Deepfakes, but instead of training on one person’s face, it trains on billions of images scraped from the internet.

The dataset Stable Diffusion was trained on, LAION-5B, has almost 6 billion image-text pairs to pull from.

More impressive than the tech itself is how quickly it has advanced. Some may remember hearing about DALL-E, OpenAI’s image generator and a separate project from Stable Diffusion, back in the spring of 2022.

DALL-E was pretty impressive when making simple images, but it only took a few months before it was able to make photoreal and complex pictures from text in seconds.

You want Kermit the Frog as a character in Blade Runner 2049? DALL-E can do that:

Insane stuff.

By December of 2022, Instagram was flooded with Stable Diffusion portraits made by the Lensa AI app. That is when people started getting worried about this new cyber marvel.

Stolen Art and AI Bewbs

Any breakthrough in technology is bound to raise concerns over its creation and use. Stable Diffusion is no different.

Some of the biggest controversies come from artists who claim that programs that use Stable Diffusion steal their original work and repackage it.

This debate over art copyrights is going to court as of this writing, with professional artists saying this use of their work falls under the Derivative Work section of copyright protection laws and should not be considered Fair Use.

It would be like Disney suing over a new movie about a genius billionaire playboy philanthropist in robot armor named Titanium Man.

Besides the battle over usage rights, people who used the Lensa AI app to get some likes on social media also have a bone to pick with AI art.

Specifically, how horny it seems to be.

Female users noticed that the app not only gave them an ample rack, but in some cases it would render an image of them topless. Most Stable Diffusion apps have restrictions to block NSFW creations, but Lensa AI seemed to be skipping that step on its own.

Women who were not given a digital boob-job had issues, too. Many ladies who tried the app were shocked at how many of the results were overly sexualized and scantily clad. Olivia Snow, a writer for Wired, said these results showed up even when she used pictures of herself as a child.

Oh dear.

Lensa AI and its parent company Prisma Labs say they are working on strengthening the adult content filters, but admit there is only so much they can do.

So if most apps using Stable Diffusion have filters to block nudity and other adult prompts from being used, then why is anyone worried about AI porn?

Because where there is a boner, there is a way.

Un-Stable Diffusion – Finding Ways to Make AI Porn

As mentioned, most web-based apps have safeguards in place to block pornographic or disturbing content, but there are numerous ways around them.

The first is whether someone is using a web-based app or running the model locally. If you have a beefy enough computer, you can run any version of Stable Diffusion at home, away from companies that have restrictions in place.

Another work-around is being very selective with your prompts.

Using “nude” instead of “naked” or “breasts” in place of “tits” may help you fly under the radar.

Some web-based apps like Mage.Space allow NSFW prompts for a price, while others like Dezgo and Stable Horde let users go wild for free. There are even platforms dedicated to creating porn images, like Pornpen.ai.

That being said, the results are not always perfect. In fact, sometimes they are downright haunting. Sometimes the person in the generated image has three arms, or a leg where their penis should be, or worse.

Think of David Cronenberg meets Pornhub and you get the idea.

When used to make porn, any method of generative AI comes with a variety of concerns. Here are a few examples:

1. Text to Image AI Porn Prompts

This is like the professional kitty shown earlier, where all you need to do is type in what you want to see as a prompt and the goblins in cyberspace try to give it to you.

If I enter the prompt “topless muscular redhead goth milf with tattoos in a graveyard, foggy night, photoreal, 4k” then I get this:

The program seems to have created this woman from thin air, but now that we know how Stable Diffusion works, we can bet that our spooky sexy lady was built from patterns the model learned from photos of real people.

Stable Diffusion’s training data likely includes plenty of images of porn actors, which is how it learned to make nudes like this. So do those actors get a cut from companies profiting off their bodies?

It is similar to the problems visual artists are having with AI generated art, where the programs take actors’ bodies and repurpose them without their knowledge or consent. Those are someone’s tits that they use to put food on the table and that person deserves compensation.

The best OnlyFans accounts are only going to carry on if their images aren’t stolen for AI use.

Speaking of actors, we now need to talk about faking nudes.

Fake celebrity nudes have been around as long as people have been able to edit photos. The big difference now is that what once took a lot of time and skill is as easy as typing a few words and clicking a button.

Here is Obi-Wan himself, Ewan McGregor, after I cropped out “his” lightsaber.

While the computer may not nail the face every time, some of the results are damn close.

As Stable Diffusion advances, it won’t be long before anyone can create fake celeb nudes, or photos of celebs performing sex acts, that are indistinguishable from the real thing. The more public the face, the more generative AI models like Stable Diffusion can learn.

That sucks for any public figure, having lost all control over their image and body, but us non-celebrities out here shouldn’t be worried, right?

2. Image to Image AI Porn Prompts

As the Lensa AI fiasco showed, an everyday person could stumble into creating a fake nude of themselves with no effort.

So, what is to stop someone else from whipping up a batch of spicy pics featuring you?

For most AI portrait apps, the user needs to upload dozens of pictures of themselves. Thanks to social media, anyone who can view your profile can also upload dozens of your pics for their unsavory use.

That creepy guy who follows you on Facebook can play with prompts and settings to have you act as a puppet in their sickest fantasies.

Assuming all the creeper-in-question wants is a nude and not a full porn scene, there is an even more unnerving method that only requires one photo.

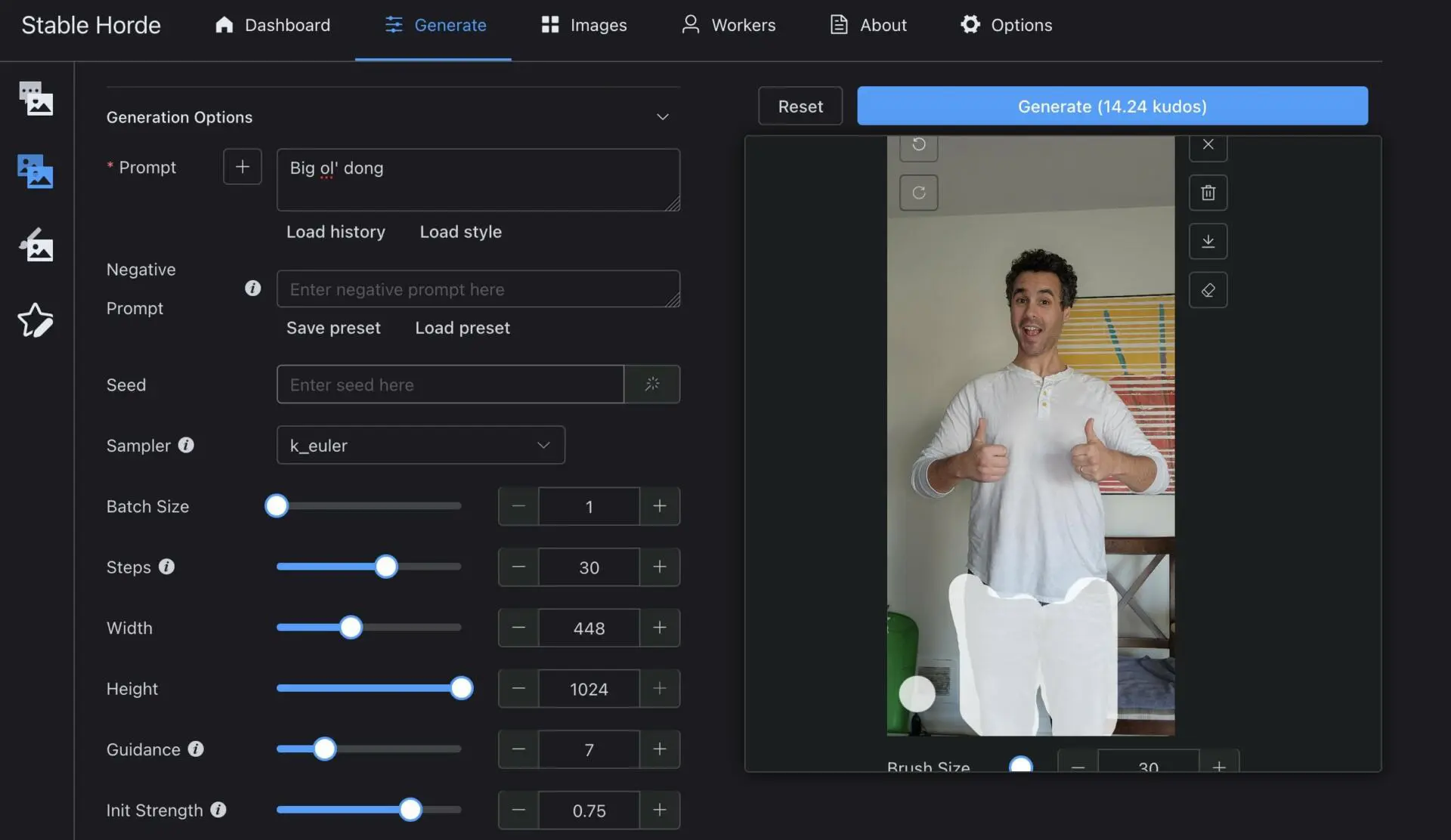

Many image-to-image apps have a tool called “inpainting”, where the user blocks out a specific part of an image for the generative AI model to do its thing and leaves the rest untouched.

It doesn’t take a strong imagination to see how this could be used.

Someone could use a single pic to make their fake nudes without inpainting, but it would take a lot of trial and error to get the desired result.

With inpainting, it is as simple as drawing over the clothes and typing “nude” in the prompt.

Personally, I tried this technique to give myself a big ol’ dong:

Concerns have been raised over revenge porn, where nude photos or videos are shared without the subject’s consent. Much like when Deepfakes hit the scene, there is little legal recourse the victim can take.

If someone is the victim of revenge porn, they often have to rely on the DMCA takedown system to have the material removed. The problem is that to file a takedown, the victim needs to own the copyright to the material, which usually means they took the picture themselves, as with a selfie. Being the subject of a photo is not the same as owning it.

But what about revenge porn made via Stable Diffusion? Who is technically the owner?

There is no federal law about this yet, and only a handful of states, such as Virginia and California, have laws covering sexually explicit deepfakes. If new laws about AI revenge porn are modeled after copyright infringement cases, then a lot of it might be totally legal. If a work is changed enough for a different purpose, e.g. movie reviews or parodies, then it could fall under Fair Use.

It is too early to say how courts will handle this, but the possibility that a new form of revenge porn could be legal is heartbreaking.

Sadly, as bad as these scenarios are, there are way worse ones.

Strap in, this is about to get dark.

Ugh, You Knew This Was Coming – AI Porn & Predators

Looking back, maybe creating a program that can create images of anything you can imagine and letting the internet use it was a bad idea.

When platforms run generative models like Stable Diffusion with no restrictions, predators take full advantage. This opens the door to horrifying images of rape, mutilation, and child exploitation being created by the thousands.

As mentioned above, it wouldn’t take much for someone to make a convincing depiction of a celebrity, friend, or ex being sexually assaulted and violently murdered.

Even if someone is not using a real person to put in their violent rape fantasy, the fact that it is so easily generated is nauseating.

There is no judgment toward adults who engage in consensual role-play of rape or incest scenarios with each other, but children can also use these apps to make truly disturbing and mentally damaging content.

Remember being in middle school and seeing who could top their friends with the sickest, darkest joke?

Now kids, without any effort, can make hundreds of intensely gross and disturbing images to one-up each other.

The issue is not solely related to photorealistic images. Part of the fun of generative AI is playing with different styles of art, like the extremely popular anime look.

Not to dunk on anime fans, but there is already an issue in that fandom around child-like girls being sexualized.

Yeah, yeah, she’s actually a 300-year-old fairy spirit or whatever, but that doesn’t change the fact that she looks thirteen.

There is a massive amount of AI generated “Loli” media out there now. If you don’t know what “Loli” is, congrats and I envy you.

Loli is a play on the idea of Lolita: young anime-style girls, often tweens or younger, are depicted as sexually aware and mature objects. Lolicons, people who enjoy Loli art, have had to be careful with this stuff in the United States.

The legality here is murky at best.

Different states have different laws when it comes to virtual child porn, but at the federal level, Loli is generally illegal only if two conditions are met.

- The depiction of a minor is obscene, or it shows certain graphic sex acts and lacks serious literary, artistic, political, or scientific value.

- The material has a federal connection: for example, it was sent through the mail or over the internet, crossed state lines, or was made with materials that traveled across state lines.

First of all, what does the Supreme Court consider a non-obscene image of a sexualized child?

Whether a Lolicon who makes Loli content purely for themselves is breaking federal law is far from settled. Considering how slow laws are to change for the better and how fast generative AI is advancing, there is no concrete answer to the legality of AI-made Loli either.

Photorealistic child porn created by Stable Diffusion is less murky on paper, since federal law covers computer-generated images that are indistinguishable from a real minor. Enforcement is the problem. Some cretin could spend a whole weekend generating gigabytes’ worth of photorealistic child exploitation and never hear a single FBI siren.

Another nightmarish reality is the fact that a person could use this tech to make convincing images of another person engaging in criminal behavior.

Mark my words, any day now some sack of shit like Matt Walsh or Chaya Raichick will be screeching about AI-generated “photographic evidence” that drag queens abuse children.

It won’t matter if the images are proven to be 100% fake. Like everything else right-wing grifters have said about supposed “groomers”, their followers will buy it hook, line, and sinker.

There is a reason groundbreaking technology is compared to the discovery of fire. Advancements like Stable Diffusion can be a world changing tool or burn everything to the ground.

Generate Some Actual Intelligence

Generative AI porn made possible by programs like Midjourney and Stable Diffusion has a lot of problems, but that does not mean we need to ban or destroy it.

Newer versions of generative AI models, like Stable Diffusion 2.0, try to discourage abuse with stricter filtering, such as removing NSFW images from the training data. In the developers’ defense, there is only so much they can do.

People are free to express themselves and create whatever their hearts desire. Unfortunately, some hearts hold a circus of horrors. AI-generated media may be groundbreaking, but how people use new technology has always been the same: weaponize it or jerk off to it.

Like the internet itself, AI-generated art is an immensely powerful tool that is both awe-inspiring and terrifying. No matter how specific your desires are, you can make those dreams a reality with AI porn.

There is no reason not to use it to improve your life or just have fun, but we as a society need to come to terms with how it can be used to harm others and be smarter than the technology we create.
